What’s the Difference Between Distributed & Parallel Computing?

We have witnessed the technology industry evolve a great deal over the years. Earlier computer systems could complete only one task at a time. Today, we multitask on our computers like never before.

As technology has improved, so have our expectations of the problems computers can handle. This has given rise to many computing methodologies; parallel computing and distributed computing are two of them.

Although the names might suggest that the two methodologies are the same, they work differently. What are they exactly, and which one should you opt for? We’ll answer these questions and more!

Parallel computing is a model that divides a task into multiple sub-tasks and executes them simultaneously to increase the speed and efficiency.

Here, a problem is broken down into multiple parts. Each part is then broken down into a number of instructions.

These parts are allocated to different processors which execute them simultaneously. This increases the speed of execution of programs as a whole.
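To make the idea concrete, here is a minimal Python sketch, assuming a single multi-core machine. The names `parallel_sum` and `partial_sum` are hypothetical, chosen for illustration. The sketch splits a list into chunks (the sub-tasks), hands each chunk to a separate worker process through the standard library's `multiprocessing.Pool`, and adds the partial results together. It uses processes rather than threads because CPython's global interpreter lock prevents threads from executing Python bytecode in parallel.

```python
# Sketch only: `partial_sum` and `parallel_sum` are hypothetical names;
# assumes one multi-core machine.
import multiprocessing as mp


def partial_sum(chunk):
    """One sub-task: add up a single slice of the data."""
    return sum(chunk)


def parallel_sum(numbers, workers=4):
    """Divide the job into sub-tasks, run them at the same time, combine the results."""
    # Break the problem into roughly equal parts (ceiling division, at least 1).
    size = max(1, -(-len(numbers) // workers))
    chunks = [numbers[i:i + size] for i in range(0, len(numbers), size)]

    # Use the "fork" start method where the platform offers it, so the sketch
    # also works when pasted into an interactive session; otherwise fall back
    # to the platform default.
    if "fork" in mp.get_all_start_methods():
        ctx = mp.get_context("fork")
    else:
        ctx = mp.get_context()

    # Each chunk goes to a separate worker process; the pool runs them in parallel.
    with ctx.Pool(processes=workers) as pool:
        partials = pool.map(partial_sum, chunks)

    # Combine the partial results into the final answer.
    return sum(partials)


if __name__ == "__main__":
    data = list(range(1_000_000))
    print(parallel_sum(data))  # prints 499999500000, the same as sum(data)
```

Splitting into one chunk per worker keeps the example simple. For small inputs, the cost of starting processes and copying data to them can outweigh the speed-up.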

Distributed computing is different from parallel computing, even though the underlying principle of dividing work into smaller tasks is the same.

Distributed computing is a field that studies distributed systems. Distributed systems are made up of multiple computers, often in different locations.

The computers in a distributed system work on the same program. The program is divided into different tasks, which are allocated to different computers.

The computers communicate by passing messages to one another. Once the computation is complete, the results are collated and presented to the user.
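The message-passing pattern can also be sketched in Python. This is a single-machine simulation under stated assumptions: each "computer" is a separate operating-system process created with the standard `multiprocessing` module, and the nodes share no variables; they only exchange messages through queues. In a real distributed system those messages would travel over a network between machines (for example, through sockets). The names `worker_node` and `run_distributed`, and the use of `None` as a shutdown message, are hypothetical choices for this example.

```python
# Simulation only: each "computer" is a local process and queues stand in for
# the network; `worker_node` and `run_distributed` are hypothetical names.
import multiprocessing as mp


def worker_node(inbox, outbox):
    """A simulated computer: it keeps its own memory and only sees messages."""
    while True:
        message = inbox.get()        # block until a message arrives
        if message is None:          # None is this sketch's "shut down" message
            break
        task_id, chunk = message
        # Compute locally, then report the result by sending a message back.
        outbox.put((task_id, sum(x * x for x in chunk)))


def run_distributed(numbers, nodes=3):
    """Split the work into tasks, send them out as messages, collate the replies."""
    if "fork" in mp.get_all_start_methods():
        ctx = mp.get_context("fork")
    else:
        ctx = mp.get_context()

    inboxes = [ctx.Queue() for _ in range(nodes)]
    results = ctx.Queue()
    procs = [ctx.Process(target=worker_node, args=(box, results)) for box in inboxes]
    for p in procs:
        p.start()

    # Divide the program into tasks and send one task message per chunk.
    size = max(1, -(-len(numbers) // nodes))
    tasks = [numbers[i:i + size] for i in range(0, len(numbers), size)]
    for task_id, chunk in enumerate(tasks):
        inboxes[task_id % nodes].put((task_id, chunk))

    # Collate: wait for exactly one reply message per task.
    replies = [results.get() for _ in tasks]

    for box in inboxes:
        box.put(None)  # ask every node to stop
    for p in procs:
        p.join()
    return sum(value for _, value in replies)


if __name__ == "__main__":
    print(run_distributed(list(range(10))))  # prints 285 (sum of squares 0..9)
```

The nodes never read each other's variables: every piece of data they work on arrives in a message, and every result leaves in one.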

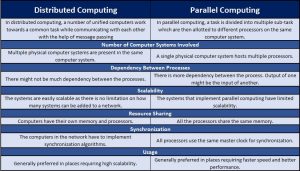

Having covered the concepts, let’s dive into the differences between them:

Parallel computing generally requires one computer with multiple processors. Multiple processors within the same computer system execute instructions simultaneously.

All the processors work towards completing the same task. Thus they have to share resources and data.

In distributed computing, several computer systems are involved. Here multiple autonomous computer systems work on the divided tasks.

These computer systems can be located at different geographical locations as well.

In parallel computing, the tasks to be solved are divided into multiple smaller parts. These smaller tasks are assigned to multiple processors.

Here, the output of one task might be the input of another, which increases the dependency between the processors. For this reason, parallel computing environments are said to be tightly coupled.

Some distributed systems might be loosely coupled, while others might be tightly coupled.

In parallel computing environments, the number of processors you can add is restricted, because the bus connecting the processors to the memory can handle only a limited number of connections. This limitation makes parallel systems less scalable.

Distributed computing environments are more scalable. Because the computers are connected over a network and communicate by passing messages, capacity can be increased by adding more machines rather than by attaching more processors to a shared bus.

In systems implementing parallel computing, all the processors share the same memory.

They also share the same communication medium and network. The processors communicate with each other with the help of shared memory.

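Distributed systems, on the other hand, consist of computers that each have their own memory and processors, so they exchange data by passing messages rather than through shared memory.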
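The shared-memory style of communication used in parallel systems looks quite different in code. In this minimal Python sketch, assuming a single multi-core machine, the worker processes do not send their results anywhere: each writes its partial sum into its own slot of a shared array created with `multiprocessing.Array`, and the parent reads the combined result from that same memory once every worker has finished. The names `worker` and `shared_memory_sum` are hypothetical, used only for illustration.

```python
# Sketch only: `worker` and `shared_memory_sum` are hypothetical names;
# assumes one multi-core machine.
import multiprocessing as mp


def worker(slot, chunk, shared_results):
    """Write this worker's partial sum directly into shared memory."""
    shared_results[slot] = sum(chunk)


def shared_memory_sum(numbers, workers=4):
    if "fork" in mp.get_all_start_methods():
        ctx = mp.get_context("fork")
    else:
        ctx = mp.get_context()

    # One signed 64-bit slot per worker ("q"), zero-initialised and visible to
    # all processes. Each worker writes only its own slot, so no lock is needed.
    # Sums larger than a 64-bit integer would overflow.
    shared_results = ctx.Array("q", workers, lock=False)

    size = max(1, -(-len(numbers) // workers))
    procs = []
    for slot in range(workers):
        chunk = numbers[slot * size:(slot + 1) * size]
        p = ctx.Process(target=worker, args=(slot, chunk, shared_results))
        p.start()
        procs.append(p)

    for p in procs:
        p.join()  # after this, every slot holds its worker's result

    # The parent reads the results from the same memory the workers wrote to.
    return sum(shared_results)


if __name__ == "__main__":
    print(shared_memory_sum(list(range(100))))  # prints 4950
```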

In parallel systems, all the processors share the same master clock for synchronization. Since all the processors are hosted on the same physical system, they do not need algorithms to synchronize separate clocks.

In distributed systems, the individual computers do not have access to a central clock. Hence, they need to implement clock synchronization algorithms.
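Cristian's algorithm is one classic example of such a synchronization algorithm, used here purely as an illustration. A client asks a time server for the time, measures the round trip on its own clock, and assumes the reply spent half of that round trip in transit. The sketch below is a deterministic simulation: the `Clock` class, `cristian_correction`, the clock offsets, and the delay values are all hypothetical, and no real network is involved.

```python
# Simulation only: `Clock`, `cristian_correction`, the offsets and the delays
# are hypothetical; no real network is used.
from dataclasses import dataclass


@dataclass
class Clock:
    """A machine's local clock: the true time plus a fixed offset (its error)."""
    offset: float

    def read(self, true_time):
        return true_time + self.offset


def cristian_correction(client, server, send_at, delay_out, delay_back):
    """Return the adjustment the client should apply, per Cristian's algorithm.

    `send_at` and the delays are in "true" time and only drive the simulation;
    the client itself sees nothing but t0, t1 and the server's timestamp.
    """
    t0 = client.read(send_at)                           # client sends request
    server_time = server.read(send_at + delay_out)      # server stamps its reply
    t1 = client.read(send_at + delay_out + delay_back)  # client receives reply
    # Assume the reply spent half of the measured round trip in transit.
    estimate = server_time + (t1 - t0) / 2
    return estimate - t1


if __name__ == "__main__":
    client, server = Clock(offset=-1.5), Clock(offset=0.0)
    # Symmetric delays: the client learns it is 1.5 s behind the server.
    print(round(cristian_correction(client, server, 100.0, 0.2, 0.2), 6))  # prints 1.5
```

Changing the delays to 0.1 s out and 0.3 s back makes the function return about 1.4 instead of 1.5: when the two legs of the trip are unequal, the estimate is off by half their difference. This is why practical implementations typically repeat the exchange and trust the samples with the shortest round trip.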

Parallel computing is often used where high processing power and speed are required, for example, in supercomputers.

Since the processors communicate through shared memory rather than over a network, there is no network lag in passing messages, which gives these systems high speed and efficiency.

Distributed computing is used when the computers are in different geographical locations.

In these scenarios, speed is generally not a crucial matter. Distributed systems are the preferred choice when scalability is required.

All in all, both computing methodologies are needed. They serve different purposes and are useful in different circumstances.

It is up to the user or the enterprise to make a judgment call about which methodology to opt for. Generally, enterprises opt for one or both, depending on which is more efficient for a given workload. The choice ultimately depends on the expected results.
