Chinese AI lab DeepSeek has launched a new reasoning model, DeepSeek-R1, which it claims outperforms OpenAI’s reasoning model, o1, on several benchmarks. The launch comes shortly after the release of DeepSeek-V3, which also outperformed models from major tech companies such as Meta.

DeepSeek-R1 boasts a mixture-of-experts architecture and is designed to enhance problem-solving and analytical capabilities. The model is available in two versions: DeepSeek-R1-Zero, trained exclusively through reinforcement learning, and the full DeepSeek-R1, which incorporates a multi-stage reinforcement learning process to improve its reasoning abilities and readability.

Impressive Benchmark Performance

DeepSeek-R1 posted strong results across several evaluations. On the AIME 2024 mathematics benchmark, it scored 79.8%, on par with OpenAI’s o1. On MATH-500, a set of 500 competition-level math problems, DeepSeek reports 97.3% accuracy.

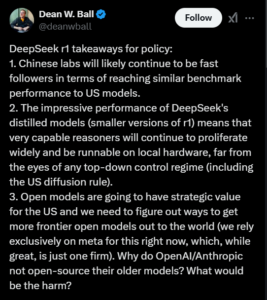

The model also did well on coding challenges, ranking in the 96.3rd percentile on Codeforces, a competitive programming platform.

In a recent post on X, Dean W. Ball, an AI researcher at George Mason University, said the trend suggests Chinese AI labs will continue to be “fast followers.”

Practical Applications and Accessibility

The model’s advanced reasoning skills position it as a valuable tool for educational systems, particularly in tutoring and advanced mathematics. Its proficiency in coding also makes it suitable for software development, especially in code generation and debugging.

DeepSeek-R1 is available as an open-source model under the MIT license on the AI development platform Hugging Face, allowing for unrestricted commercial use. The company claims that accessing R1 is 90-95% cheaper than using OpenAI’s o1, making it a cost-effective option for developers and researchers.
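To put the pricing claim in concrete terms, here is a minimal sketch of the arithmetic. The $100 baseline bill is a hypothetical figure chosen purely for illustration, not an actual o1 price, and the function name is likewise made up for this example.

```python
def discounted_cost(baseline_cost: float, percent_cheaper: float) -> float:
    """Return what a workload costs if it is `percent_cheaper` percent cheaper."""
    if not 0 <= percent_cheaper <= 100:
        raise ValueError("percent_cheaper must be between 0 and 100")
    return baseline_cost * (100 - percent_cheaper) / 100


# Hypothetical baseline: a workload that costs $100 on o1 (illustrative only).
HYPOTHETICAL_O1_BILL = 100.0

best_case = discounted_cost(HYPOTHETICAL_O1_BILL, 95)   # 95% cheaper
worst_case = discounted_cost(HYPOTHETICAL_O1_BILL, 90)  # 90% cheaper

print(f"Same workload on R1: ${best_case:.2f} to ${worst_case:.2f}")
```

Under that assumption, a claimed 90-95% saving means the same workload would cost roughly one-tenth to one-twentieth as much on R1.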

Regulatory Considerations

However, as a Chinese-developed model, DeepSeek-R1 is subject to oversight by China’s internet regulators, which may restrict its responses on sensitive topics. That raises questions about which subjects the model will address and how complete its answers will be.

With this launch, DeepSeek joins a growing list of Chinese labs, including Alibaba and Moonshot AI, the maker of Kimi, that are producing competitive AI models. DeepSeek-R1 is the latest sign that these labs are closing the gap with their U.S. counterparts.
