Ensemble learning is a smart way to increase the accuracy and dependability of machine learning models. By combining the strengths of several models, it reduces errors and improves predictive performance. The method is applied to tasks such as prediction and data classification, and it has a wide range of practical applications, from diagnosing diseases to detecting fraud. In this guide, you will explore the fundamentals of ensemble learning methods and how they are applied across many sectors to tackle challenging problems.

What is Ensemble Learning?

Ensemble learning is the process of combining multiple machine learning models to produce a more reliable and accurate predictive model. The main objective is to use the combined capabilities of several models to reduce errors and improve performance.

Ensemble learning is based on the idea that the limitations of an individual model can be offset by combining its predictions with those of other models. Compared with single-model techniques, ensemble learning can reduce the impact of both bias and variance. Some models may be biased while others have high variance, but combining them often produces predictions that are more accurate and better balanced.

The method is widely used for classification, where ensemble models assign data points to categories, and for regression, where they predict continuous values. Industries such as cybersecurity, healthcare, and finance use ensemble learning to improve the precision and reliability of models for risk assessment, diagnostics, and fraud detection.

Ensemble models fall into two categories: homogeneous ensembles, which use the same type of base model, and heterogeneous ensembles, which combine several model types. Both strategies can offer notable improvements over individual models because they bring varied perspectives to the prediction process.

Types of Ensemble Learning Models

Homogeneous Ensembles

Homogeneous ensembles use the same underlying model type for every member, but each instance is trained on a different subset of the data or with different hyperparameters. The main benefit of this approach is that it averages out the errors of the individual models, which lowers variance.
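
As a rough illustration of why averaging lowers variance, assume (for simplicity, not as a property of any particular model) that each of M models has prediction variance σ² and that every pair of models has correlation ρ. The variance of their averaged prediction is then:

$$
\operatorname{Var}\left(\frac{1}{M}\sum_{m=1}^{M}\hat{f}_m(x)\right) = \rho\,\sigma^2 + \frac{1-\rho}{M}\,\sigma^2
$$

When the models are uncorrelated (ρ = 0), the variance falls to σ²/M; when they are highly correlated (ρ close to 1), averaging helps very little. This is why homogeneous ensembles try to make their members differ, for example by training them on different data subsets.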

Random Forest, which applies bagging (Bootstrap Aggregating) to decision trees, is one of the most widely used homogeneous ensemble techniques. Each tree is trained on a different bootstrap sample of the data and considers only a random subset of features at each split, which makes the trees less correlated. The final prediction is the majority vote for classification tasks or the average prediction for regression tasks. Because this reduces overfitting, it usually improves generalization.

Heterogeneous Ensembles

Heterogeneous ensembles combine different types of models, such as decision trees, neural networks, and support vector machines, so that the combined system can capture different aspects of the data.

Stacking, which trains several base models on the same dataset, is a popular heterogeneous ensemble technique. Their predictions are then fed into a meta-model, which combines the outputs of the base models to generate the final prediction.

Ensemble Learning Methods

Ensemble learning techniques are commonly grouped into two broad categories: simple and advanced methods.

Simple Ensemble Learning Methods

1. Max Voting

In classification tasks, each model predicts a class, and max voting selects the class that receives the most votes as the final prediction. This approach works well when most of the models perform comparably.
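
A minimal sketch of max voting in plain Python, assuming each model has already produced a class label. The helper names `max_vote` and `max_vote_batch` are hypothetical and do not come from any particular library.

```python
from collections import Counter


def max_vote(predictions):
    """Return the class predicted by the most models for a single sample.

    Ties are broken in favour of the tied label that appears first,
    because Counter.most_common keeps first-encountered order for equal counts.
    """
    return Counter(predictions).most_common(1)[0][0]


def max_vote_batch(model_predictions):
    """Apply max voting sample by sample.

    `model_predictions` holds one list of labels per model, all the same length.
    """
    return [max_vote(sample_votes) for sample_votes in zip(*model_predictions)]


# Three models, three samples.
print(max_vote_batch([[0, 1, 1], [0, 0, 1], [1, 1, 1]]))  # [0, 1, 1]
```

The tie-breaking rule is an arbitrary design choice; a real system might instead fall back to the most confident model.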

2. Averaging

In regression problems, averaging produces the final prediction by taking the mean of the predictions from several models. This smooths the forecasts and can make them more accurate by lessening the effect of any single model's errors.
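
A minimal sketch of simple averaging, assuming each model's regression outputs are already available as a list of numbers. `average_predictions` is a hypothetical helper name.

```python
def average_predictions(model_predictions):
    """Average regression outputs sample by sample.

    `model_predictions` is a list with one list of predictions per model;
    all inner lists must have the same length.
    """
    n_models = len(model_predictions)
    return [sum(sample) / n_models for sample in zip(*model_predictions)]


# Three models predicting two samples.
print(average_predictions([[10.0, 20.0], [12.0, 18.0], [11.0, 22.0]]))  # [11.0, 20.0]
```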

3. Weighted Averaging

In weighted averaging, each model is assigned a weight according to its reliability or performance. The final prediction is the weighted average of all the models' predictions, so better-performing models contribute more to the outcome.
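
A minimal sketch of weighted averaging. It assumes one non-negative weight per model; in practice such weights might come from each model's validation score, but the numbers below are made up for illustration and `weighted_average` is a hypothetical helper.

```python
def weighted_average(model_predictions, weights):
    """Combine regression outputs using one weight per model.

    Weights are normalised to sum to 1, so they only need to be
    proportional to how much each model is trusted.
    """
    if len(weights) != len(model_predictions):
        raise ValueError("need exactly one weight per model")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    norm = [w / total for w in weights]
    return [
        sum(w * p for w, p in zip(norm, sample))
        for sample in zip(*model_predictions)
    ]


# Model A (weight 3) is trusted three times as much as model B (weight 1).
print(weighted_average([[10.0], [20.0]], [3, 1]))  # [12.5]
```

With equal weights this reduces to plain averaging.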

Advanced Ensemble Learning Methods

1. Bagging (Bootstrap Aggregating)

Bagging trains several models, each on a different random subset of the data created by sampling with replacement (a bootstrap sample). After the models are trained independently, the final prediction is obtained by majority vote for classification or by averaging the models' outputs for regression. Bagging is especially useful for decision trees and other high-variance models because it reduces variance.
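
To make the bagging steps concrete, here is a minimal, dependency-free sketch for 1-D regression. It assumes a one-split decision stump as the base model purely to keep the code short; bagging usually pays off most with deeper, higher-variance trees. All function names are hypothetical.

```python
import random
from statistics import mean


def fit_stump(xs, ys):
    """Fit a one-split regression stump on 1-D data (kept simple for brevity)."""
    best = None
    for threshold in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        if not right:
            continue
        lm, rm = mean(left), mean(right)
        sse = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, threshold, lm, rm)
    if best is None:  # all x values identical: predict the overall mean
        overall = mean(ys)
        return lambda x: overall
    _, t, lm, rm = best
    return lambda x: lm if x <= t else rm


def bagging_fit(xs, ys, n_models=25, seed=0):
    """Train n_models stumps, each on its own bootstrap sample."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        # Bootstrap sample: draw n indices with replacement.
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return models


def bagging_predict(models, x):
    """Regression: average the individual models' outputs."""
    return mean(m(x) for m in models)


xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1, 1, 1, 1, 5, 5, 5, 5]
models = bagging_fit(xs, ys)
print(bagging_predict(models, 2), bagging_predict(models, 7))
```

For classification, `bagging_predict` would take a majority vote instead of the mean.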

2. Boosting

Boosting is a sequential process in which each model concentrates on correcting the mistakes of the models before it. Boosting algorithms such as AdaBoost do this by giving misclassified instances greater weight in the next round, so accuracy improves over successive iterations. Boosting is particularly effective at reducing bias and at turning weak learners into a strong one.
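
The following is a minimal sketch of one specific boosting algorithm, AdaBoost, using decision stumps on 1-D data with labels +1/-1. It is an illustrative assumption, not the only way boosting is implemented (gradient boosting, for example, fits residuals instead of reweighting samples), and the helper names are hypothetical.

```python
import math


def fit_weighted_stump(xs, ys, weights):
    """Pick the threshold/polarity with the lowest weighted error (labels +1/-1)."""
    best = None
    for t in sorted(set(xs)):
        for polarity in (1, -1):
            preds = [polarity if x > t else -polarity for x in xs]
            err = sum(w for w, p, y in zip(weights, preds, ys) if p != y)
            if best is None or err < best[0]:
                best = (err, t, polarity)
    return best


def adaboost_fit(xs, ys, n_rounds=10):
    n = len(xs)
    weights = [1.0 / n] * n
    ensemble = []  # list of (alpha, threshold, polarity)
    for _ in range(n_rounds):
        err, t, pol = fit_weighted_stump(xs, ys, weights)
        err = min(max(err, 1e-10), 1 - 1e-10)  # avoid log(0) and division by zero
        alpha = 0.5 * math.log((1 - err) / err)  # better stumps get a larger say
        ensemble.append((alpha, t, pol))
        # Increase the weight of misclassified points, decrease the rest.
        weights = [
            w * math.exp(-alpha * y * (pol if x > t else -pol))
            for w, x, y in zip(weights, xs, ys)
        ]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble


def adaboost_predict(ensemble, x):
    """Weighted vote of all stumps."""
    score = sum(alpha * (pol if x > t else -pol) for alpha, t, pol in ensemble)
    return 1 if score >= 0 else -1


xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, -1, -1, 1, 1]
ensemble = adaboost_fit(xs, ys, n_rounds=3)
print([adaboost_predict(ensemble, x) for x in xs])  # [1, 1, -1, -1, 1, 1]
```

No single stump can separate this toy data (the best one misclassifies two of the six points), but with `n_rounds=3` the three weighted stumps classify all six training points correctly.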

3. Stacking and Blending

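In stacking and blending, predictions from several base models are combined, and a meta-model bases its final prediction on their outputs. The two differ in how the meta-model is trained: blending trains it on the base models' predictions for a separate holdout (validation) set, whereas stacking typically trains it on out-of-fold predictions generated by cross-validation on the training data.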
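
A minimal blending sketch in plain Python, under several assumptions: 1-D regression data, two hypothetical base models (a least-squares line and a 1-nearest-neighbour regressor), and a meta-model that learns two weights by least squares without an intercept. None of these helpers come from a library.

```python
def fit_linear(xs, ys):
    """Ordinary least-squares line y = a + b*x (first base model)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    a = my - b * mx
    return lambda x: a + b * x


def fit_nearest_neighbour(xs, ys):
    """1-nearest-neighbour regressor (second, different base model)."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]


def fit_meta(features, targets):
    """Least-squares weights (no intercept) for two base-model predictions."""
    saa = sum(a * a for a, _ in features)
    sbb = sum(b * b for _, b in features)
    sab = sum(a * b for a, b in features)
    say = sum(a * y for (a, _), y in zip(features, targets))
    sby = sum(b * y for (_, b), y in zip(features, targets))
    det = saa * sbb - sab * sab  # assumes the base predictions are not collinear
    w1 = (say * sbb - sby * sab) / det
    w2 = (sby * saa - say * sab) / det
    return lambda a, b: w1 * a + w2 * b


def blend(train, holdout):
    (xt, yt), (xh, yh) = train, holdout
    base = [fit_linear(xt, yt), fit_nearest_neighbour(xt, yt)]
    # The meta-model sees only base-model predictions for the held-out rows.
    feats = [(base[0](x), base[1](x)) for x in xh]
    meta = fit_meta(feats, yh)
    return lambda x: meta(base[0](x), base[1](x))


train = ([0, 1, 2, 3, 4], [0, 3, 6, 9, 12])
holdout = ([0.5, 1.5, 2.5, 3.5], [1.5, 4.5, 7.5, 10.5])
model = blend(train, holdout)
print(model(5))  # 15.0
```

Because this toy data is exactly linear, the meta-model learns to rely entirely on the linear base model. A stacking variant would instead build the meta-model's training features from out-of-fold predictions on the training data.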

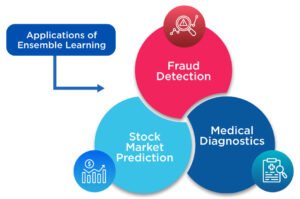

Applications and Use Cases of Ensemble Learning

Fraud Detection: In the financial industry, ensemble approaches evaluate transaction patterns across several models, which helps identify fraudulent activity more reliably and reduce false positives.

Medical Diagnostics: In healthcare, ensemble learning combines the results of several machine learning models to improve diagnostic accuracy. This is particularly helpful when patient data or medical images are used to identify disorders.

Stock Market Prediction: In finance, ensemble models combine the predictions of several models to produce more accurate forecasts of stock values.

Employ Ensemble Learning for Smarter Solutions!

Ensemble learning demonstrates the value of collaboration in machine learning: by combining their strengths, models produce more accurate and dependable predictions than they would alone. Ensemble methods can help address significant problems such as identifying fraud, improving medical diagnosis, and predicting market values.

Understanding how to apply ensemble approaches will be essential to creating more intelligent solutions as technology advances. This guide gives you the fundamental information you need to begin incorporating ensemble learning methods into your own projects.

For more insightful and technology-related content, visit us at HiTechNectar.
