Picture a basketball team where players gradually learn their roles like passing, shooting, defending and adjusting to each other’s strengths and weaknesses until they function as a unit. Now imagine the same process happening with software agents instead of humans. That’s what happens in multi-agent reinforcement learning.

Here multiple digital entities learn to navigate shared environments, sometimes working together, sometimes competing, but always adapting. These systems learn and develop sophisticated behaviors when interacting with each other. Agents develop their own communication protocols and exhibit deceptive tactics against competitors. The outcome then develops organically through trial and error rather than explicit coding.

What is Multi-Agent Reinforcement Learning (MARL)?

Multi-agent reinforcement learning is the next step up from a single-agent reinforcement learning framework in the traditional framework. MARL introduces several decision-makers into the mix, as opposed to a single agent investigating an area and discovering the best behaviors through reward signals.

These agents face a problem while simultaneously learning policies: other agents are also changing their behaviors, making the environment non-stationary from each agent’s point of view.

It is impossible to overestimate the importance of MARL in today’s intelligent systems. Consider robot teams cooperating in warehouse operations, trading algorithms vying for market share in financial markets, or autonomous cars navigating congested junctions. To address these situations, MARL offers algorithmic techniques and mathematical foundation.

Collaboration vs. Competition: Dynamics of MARL

Agents work together in cooperative MARL environments to achieve objectives. With team incentives, when each agent receives similar feedback signals, these environments tend to encourage conduct that is positively associated with the group as a whole. The credit assignment problem, that is, determining which agent’s efforts led to success while everyone receives the same reward, presents the technical difficulty in this case.

Competitive environments, on the other hand, set agents against each other in zero sum or general sum games where one’s gain often means another’s loss. These settings naturally push agents toward increasingly sophisticated strategies through an emergent arms race. Minimax algorithms, counterfactual regret minimization, and opponent modeling become crucial as agents must anticipate rivals’ moves several steps ahead.

Mixed environments blend cooperative and competitive elements, creating perhaps the most realistic and challenging scenarios. In those contexts, agents must negotiate complex social interactions, deciding when to compete for their advantage and when to cooperate for common benefit. Technical approaches often apply intrinsic motivation mechanisms or hierarchies of incentives to balance multiple objectives.

Well known MARL Algorithms and Frameworks–

MADDPG (Multi-Agent Deep Deterministic Policy Gradient)

It extends the actor-critic architecture to multi-agent settings with a clever twist during training, each agent’s critic has access to all agents’ observations and actions, while actors remain decentralized.

QMIX

It tackles the problem of value function factorization in cooperative settings by maintaining individual Q-values for each agent while mixing them together through a monotonic network architecture.

COMA (Counterfactual Multi Agent)

By comparing actual rewards against this counterfactual, agents can better understand their individual contribution to team success, cutting through the noise of other agents’ actions to find their own signal.

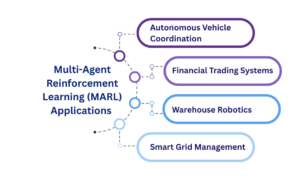

Discovering the Applications of MARL–

Without specific traffic regulations, MARL allows entire fleets to cooperate at crossings, merging lanes, and parking situations in addition to single-vehicle navigation. These systems make use of strategies like social value orientation and intention prediction.

Financial Trading Systems

By modeling market microstructure and participant behaviors through recurrent neural networks and transformer architectures, these systems can anticipate market reactions to large orders and optimize execution strategies accordingly.

Smart Grid Management

Power networks use MARL to coordinate distributed energy resources, solar panels, batteries, and flexible loads creating virtual power plants that respond to demand fluctuations.

Warehouse Robotics

These systems dynamically allocate tasks and navigation priorities through market-based mechanisms where robots “bid” on assignments based on position and capability, creating emergent efficiency without centralized control.

The Evolving Role of MARL–

A fascinating range of agent behaviors that frequently resemble human social dynamics are produced by the interaction between cooperation and competition in MARL. Cooperative settings encourage specialization and implicit coordination, while competitive environments drive strategic innovation through evolutionary pressure.

The most compelling research sits at the intersection where agents must learn when to cooperate for mutual benefit and when to compete for individual advantage. This balance between selfishness and altruism, between short-term gains and long-term relationships, makes MARL a computational exploration of social intelligence.

Conclusion

By moving away from intentionally engineered behaviors and toward emergent intelligence that adjusts to changing circumstances, MARL has the potential to revolutionize the way we construct complex systems in the future. The future belongs to adaptive collectives that strike a balance between individual autonomy and collective intelligence and not to a single superintelligent entity.

For the latest updates on concepts like Multi-Agent Reinforcement Learning, keep visiting us at HiTechNectar.

Also Read:

How AI Agents and Assistants Shape Our World Differently

Virtual Agents and the new AI Revolution in Contact Center