Two machine learning terms, model parameter and hyperparameter, are often confused with each other. Here, we will go over both terms and take a quick look at the differences between the two.

The primary aim of machine learning is to create a model from a given data set. This model is then used to predict labels, or dependent variables, for new data. To make these predictions accurate, ML models rely on optimization algorithms during the training period.

A model parameter is a variable whose value is estimated from the dataset. Parameters are the values learned during training from the historical data sets.

The values of model parameters are not set manually. They are estimated from the training data, and they are internal to the machine learning model.

Based on the training, the values of parameters are set. The model uses these values when making predictions. How well the parameter values are estimated determines the skill of your model.

If a model has a fixed number of parameters, the system is called “parametric.” On the other hand, “non-parametric” systems do not have a fixed number of parameters.

Non-parametric systems assume that the data distribution cannot be described by a fixed set of parameters.

Coefficients in a linear or logistic regression and weights in neural networks are examples of model parameters.
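To make this concrete, here is a minimal sketch of how the coefficients of a simple linear regression can be estimated from data. The function name `fit_line` and the toy data are our own assumptions for illustration; the point is only that the slope and intercept are never typed in by hand, they come out of the data.

```python
def fit_line(xs, ys):
    """Estimate slope and intercept with ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x (both unnormalized)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Toy data generated from y = 2x + 1 (an assumption for this example)
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

# slope and intercept are the model parameters, learned from the data
slope, intercept = fit_line(xs, ys)
print(slope, intercept)
```

With this toy data the estimated values should recover the slope of 2 and the intercept of 1 that were used to generate it.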

A hyperparameter is a configuration variable that is external to the model. It is defined manually before the model is trained on the historical dataset. Its value cannot be estimated from the dataset.

It is generally not possible to know the best value of a hyperparameter in advance. But we can use rules of thumb or select a value by trial and error for our system.
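Trial and error can be as simple as trying a few candidate values and keeping the one that scores best on held-out data. The sketch below does this for the number of neighbors in a tiny one-dimensional k-nearest neighbors classifier. The data, the candidate values, and the helper names `knn_predict` and `accuracy` are all made up for illustration.

```python
from collections import Counter


def knn_predict(train, x, k):
    """Predict the majority label among the k training points nearest to x."""
    neighbors = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


def accuracy(train, validation, k):
    """Fraction of validation points that are classified correctly."""
    correct = sum(knn_predict(train, x, k) == label for x, label in validation)
    return correct / len(validation)


# Toy data: (feature, label) pairs, with two deliberately noisy points
train = [(1.0, "a"), (1.5, "a"), (2.0, "a"), (2.6, "b"),
         (5.4, "a"), (6.0, "b"), (6.5, "b"), (7.0, "b")]
validation = [(1.2, "a"), (2.5, "a"), (5.5, "b"), (6.8, "b")]

# Try each candidate value of the hyperparameter and keep the best one
candidate_ks = [1, 3, 5]
scores = {k: accuracy(train, validation, k) for k in candidate_ks}
best_k = max(scores, key=scores.get)
print(scores, best_k)
```

On this toy data, a single neighbor is misled by the noisy points, while larger values smooth them out. Real projects usually search more carefully, but the idea is the same.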

Hyperparameters affect the speed and accuracy of the learning process of the model. Different systems need different numbers of hyperparameters. Simple systems might not need any hyperparameters at all.

Learning rate, the value of k in k-nearest neighbors, and batch size are examples of hyperparameters.
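Here is a rough sketch of where such values show up in practice, using a hand-written mini-batch gradient descent for a one-feature linear model. The function `train_linear`, the toy data, and the chosen values are assumptions for illustration only. Notice that `learning_rate`, `batch_size`, and `epochs` are passed in before training starts, while `w` and `b` are what the training loop actually learns.

```python
import random


def train_linear(xs, ys, learning_rate, batch_size, epochs, seed=0):
    """Fit y = w * x + b with mini-batch gradient descent on squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0  # model parameters, updated during training
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            grad_w = grad_b = 0.0
            for i in batch:
                error = (w * xs[i] + b) - ys[i]
                grad_w += 2 * error * xs[i] / len(batch)
                grad_b += 2 * error / len(batch)
            # The learning rate controls how big each update step is
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
    return w, b


# Toy data generated from y = 2x + 1 (an assumption for this example)
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

# Hyperparameters: chosen by us before training, not learned from the data
w, b = train_linear(xs, ys, learning_rate=0.05, batch_size=2, epochs=500)
print(w, b)
```

With these settings the learned `w` and `b` should end up close to 2 and 1. Changing the learning rate or batch size changes how quickly, and whether, training gets there.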

Let’s understand the difference between a parameter and a hyperparameter with the help of an example.

When you are, say, learning to drive, you have to go through learning sessions with an instructor teaching you.

Here, you are getting trained with the instructor’s help. The instructor helps you through the lessons. S/he helps you practice driving until you are confident and capable enough to drive on the road alone.

Once you are trained and able to drive, you no longer need the instructor. In this scenario, the instructor is the hyperparameter and the student is the parameter.

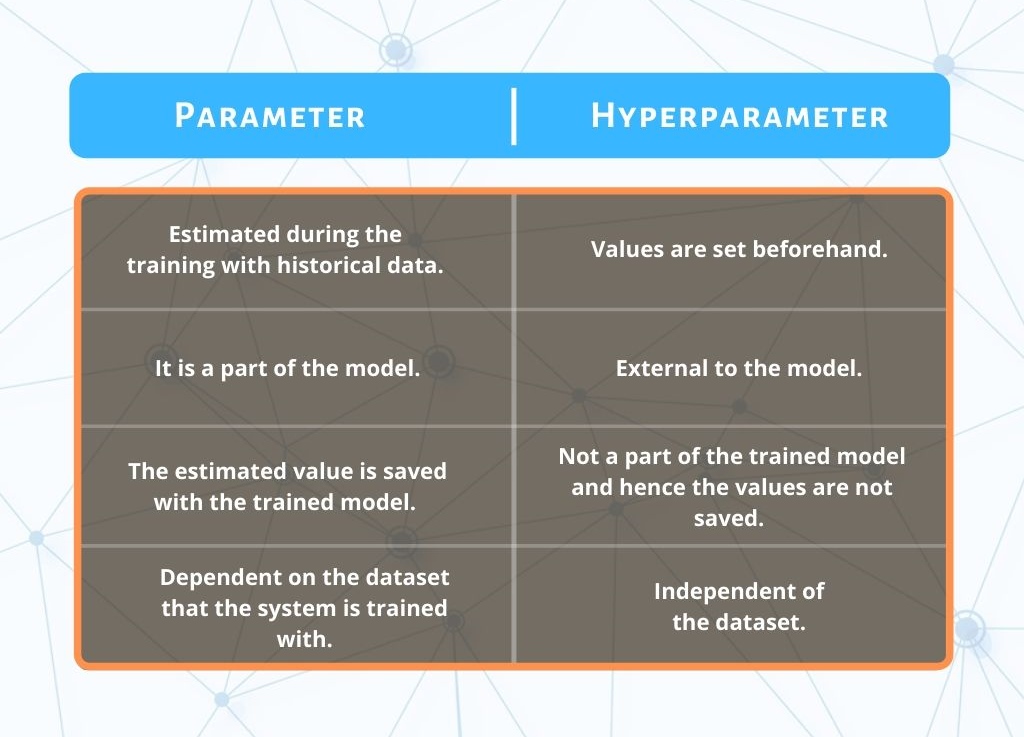

As seen earlier, parameters are the variables whose values the system estimates during training.

The values of hyper-parameters are pre-set. The values of hyper-parameters are independent of the dataset. These values do not change during training.

A hyperparameter is not part of the trained or final model. The values of model parameters estimated during training are saved with the trained model.

The hyper-parameter values are used during training to estimate the value of model parameters.

Hyper-parameters are external configuration variables, whereas model parameters are internal to the system.

Since hyperparameter values are not saved with the trained model, they are not used when the final model makes predictions. Model parameters, however, are used while making predictions.
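A small sketch can illustrate this split. The dictionaries, their values, and the `predict` helper below are hypothetical, and JSON is just one convenient way to store a model. Only the learned parameters are written out and read back, and prediction works with them alone.

```python
import json

# Hypothetical values: parameters learned in training, and the settings used
trained_model = {"slope": 2.0, "intercept": 1.0}
training_config = {"learning_rate": 0.05, "batch_size": 2, "epochs": 500}

# Only the model parameters are saved; the training settings are left out
saved = json.dumps(trained_model)
restored = json.loads(saved)


def predict(model, x):
    """Make a prediction using only the saved model parameters."""
    return model["slope"] * x + model["intercept"]


prediction = predict(restored, 3.0)
print(prediction)
```

In practice, people often keep the training settings somewhere too so that a run can be reproduced, but the model does not need them to predict.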

An easy rule of thumb to avoid confusing the two terms is to remember that if you have to specify the value beforehand, it is a hyperparameter.

Now that we have gone through both, it should be clear that the definition and usage of both the terms are different.
