There has never been a greater need for effective summarization. With over 500 hours of video uploaded to YouTube every minute and almost 7.5 million blog posts published every day, we are overloaded with content. What started as academic research in the 1950s has evolved into sophisticated algorithms that can read thousands of documents in seconds and extract their essence.

Text summarization, one of natural language processing’s most practical applications, now scales to handle everything from news articles to legal documents. Whether you’re a developer looking to implement these techniques or just someone curious about how your news app creates those perfect snippets, this guide breaks down everything worth knowing about text summarization in NLP.

What Is Text Summarization for NLP?

Text summarization is basically computational bartending – mixing vast amounts of linguistic content and serving up just the potent stuff. It’s the process by which algorithms condense lengthy texts into more manageable chunks while maintaining important details and the main idea.

Traditional summarization relies on human judgment. NLP-based approaches leverage computational linguistics, statistical methods, and increasingly, deep learning architectures to automate this process. The algorithms identify salient sentences, recognize redundancies, and stitch together the most significant parts based on semantic understanding, co-reference resolution, and discourse analysis.

Good summarization needs to understand entities and their relationships, parse complex sentence structures, and sometimes even grasp subtle nuances like sarcasm or metaphor. The field has progressed from early frequency-based techniques to advanced transformer architectures, such as T5 and BART, that can produce abstractive summaries resembling those written by humans.

Types of Automatic Text Summarization

When it comes to automatic text summarization, two primary types stand out:

Extractive summarization works like a highlighter-wielding graduate student, identifying and pulling out existing sentences verbatim from the source material. These algorithms rank sentences based on features like term frequency-inverse document frequency (TF-IDF), sentence position, presence of named entities, and semantic similarity to the document’s central themes.
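To make the ranking idea concrete, here is a minimal sketch of TF-IDF sentence scoring in plain Python. It is an illustration under stated assumptions rather than a production method: each sentence is treated as its own "document" when computing IDF, and the helper names (`tokenize`, `tfidf_sentence_scores`, `extractive_summary`) are hypothetical. Real systems usually combine this signal with position, named entities, and similarity features.

```python
import math
import re
from collections import Counter


def tokenize(sentence):
    """Lowercase a sentence and split it into word tokens."""
    return re.findall(r"[a-z']+", sentence.lower())


def tfidf_sentence_scores(sentences):
    """Score each sentence by the mean TF-IDF weight of its distinct words.

    Assumption for this sketch: every sentence counts as one "document"
    when computing inverse document frequency.
    """
    tokenized = [tokenize(s) for s in sentences]
    n = len(tokenized)
    # Document frequency: in how many sentences does each word occur?
    df = Counter(word for tokens in tokenized for word in set(tokens))
    scores = []
    for tokens in tokenized:
        if not tokens:
            scores.append(0.0)
            continue
        tf = Counter(tokens)
        total = sum((count / len(tokens)) * math.log(n / df[word])
                    for word, count in tf.items())
        scores.append(total / len(tf))
    return scores


def extractive_summary(sentences, k=2):
    """Return the k top-scoring sentences, kept in their original order."""
    scores = tfidf_sentence_scores(sentences)
    top = sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)[:k]
    return [sentences[i] for i in sorted(top)]
```

Because the selected sentences are copied verbatim, the output cannot contain anything the source did not say, which is the main appeal of the extractive approach.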

Popular algorithms like TextRank and LexRank build graph representations of sentences and use modified PageRank algorithms to determine centrality. While extractive methods can sometimes produce choppy summaries with coherence issues, they’re computationally efficient and less prone to factual errors since they only use original text.

Abstractive summarization channels your creative friend who reads a book and retells it in their own words. These systems generate entirely new text that captures the essence of the original content, using different phrasing altogether. This is typically done with sequence-to-sequence neural architectures enhanced with attention mechanisms, including transformer models such as BART, T5, or PEGASUS.

Abstractive models can compress information more efficiently and produce more fluent, human-like summaries, but they come with their own challenges. They might hallucinate facts not present in the source, struggle with very long documents, or miss key details. Hybrid approaches like pointer-generator networks attempt to get the best of both worlds by copying important phrases directly from the source while generating novel connecting text.
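The copy-or-generate idea behind pointer-generator networks can be sketched numerically. At each decoding step, the model mixes two distributions: the decoder's vocabulary distribution, weighted by a generation probability, and the attention weights over source tokens, weighted by the remainder. The function below is a toy illustration with hand-picked numbers, not a trained model; the name `final_distribution` and its arguments are assumptions made for this example.

```python
def final_distribution(p_gen, vocab_dist, attention, source_tokens):
    """Mix generation and copy probabilities for one decoding step.

    p_gen:         probability of generating from the vocabulary (0..1)
    vocab_dist:    dict mapping word -> probability from the decoder softmax
    attention:     attention weight per source position (sums to 1)
    source_tokens: source words, aligned with `attention`
    """
    # Generation part: scale the vocabulary distribution by p_gen.
    mixed = {word: p_gen * p for word, p in vocab_dist.items()}
    # Copy part: add (1 - p_gen) * attention mass to each source word,
    # including words that are not in the vocabulary at all.
    for token, weight in zip(source_tokens, attention):
        mixed[token] = mixed.get(token, 0.0) + (1.0 - p_gen) * weight
    return mixed


# Toy numbers: "Zelenko" is out of vocabulary but appears in the source.
dist = final_distribution(
    p_gen=0.6,
    vocab_dist={"the": 0.5, "court": 0.3, "ruled": 0.2},
    attention=[0.75, 0.25],
    source_tokens=["Zelenko", "ruled"],
)
```

In this toy step the out-of-vocabulary name ends up with real probability mass purely through copying, which is how such models can reproduce rare names and figures from the source.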

NLP Text Summarization Techniques

Text summarization’s historical foundation is made up of statistical methods that use TF-IDF scoring, word and phrase frequencies, and sentence-position heuristics to pinpoint key information. Compared to modern neural algorithms, these techniques may appear archaic, yet they remain effective and computationally economical for texts such as news stories.
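As a rough illustration of how frequency and position heuristics can be combined, the sketch below scores each sentence by the average frequency of its content words plus a bonus for appearing early. The stopword list, the `position_weight` value of 0.3, and the function name are all assumptions chosen for this example, not values from any particular system.

```python
import re
from collections import Counter

# A tiny illustrative stopword list; real systems use much larger ones.
STOPWORDS = {"the", "a", "an", "of", "to", "in", "and", "is", "on", "for", "was", "at", "by"}


def score_sentences(sentences, position_weight=0.3):
    """Combine a word-frequency score and a position score per sentence."""
    tokenized = [
        [w for w in re.findall(r"[a-z]+", s.lower()) if w not in STOPWORDS]
        for s in sentences
    ]
    freq = Counter(w for tokens in tokenized for w in tokens)
    max_freq = max(freq.values(), default=1)
    n = len(sentences)
    scores = []
    for i, tokens in enumerate(tokenized):
        # Average normalized frequency of the sentence's content words.
        freq_score = sum(freq[w] / max_freq for w in tokens) / len(tokens) if tokens else 0.0
        # Earlier sentences score higher, reflecting the lead bias of news.
        pos_score = 1.0 - i / n
        scores.append((1 - position_weight) * freq_score + position_weight * pos_score)
    return scores


article = [
    "The council approved the budget on Monday.",
    "The budget funds road repairs.",
    "Officials declined to comment.",
]
scores = score_sentences(article)
```

The position bonus is what makes this kind of heuristic work well on news, where the opening sentences tend to carry the main facts; on other genres the weight would likely need to be lowered.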

Graph-based techniques like TextRank treat sentences as nodes in a network, with edges representing semantic similarity between them. The algorithm then applies modified PageRank calculations to identify the document’s essence – a clever way of finding the “influencers” in a text without needing massive training data.
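Here is a compact sketch of the TextRank idea in plain Python. It assumes a simple word-overlap measure as a stand-in for semantic similarity and runs a fixed number of PageRank-style iterations; the function names and the defaults (`damping=0.85`, `iterations=50`) are illustrative choices, and a real implementation would typically use a graph library and a convergence check.

```python
import math
import re


def similarity(a, b):
    """Word overlap between two token lists, normalized by their lengths."""
    wa, wb = set(a), set(b)
    if len(wa) < 2 or len(wb) < 2:
        return 0.0  # avoids log(1) = 0 in the denominator
    return len(wa & wb) / (math.log(len(wa)) + math.log(len(wb)))


def textrank(sentences, damping=0.85, iterations=50):
    """Return one centrality score per sentence."""
    n = len(sentences)
    if n == 0:
        return []
    tokens = [re.findall(r"[a-z]+", s.lower()) for s in sentences]
    # Weighted adjacency matrix with no self-loops.
    w = [[similarity(tokens[i], tokens[j]) if i != j else 0.0 for j in range(n)]
         for i in range(n)]
    out_sum = [sum(row) for row in w]
    ranks = [1.0 / n] * n
    for _ in range(iterations):
        new_ranks = []
        for i in range(n):
            # Each neighbor j passes on a share of its rank proportional
            # to the weight of the edge between j and i.
            incoming = sum(w[j][i] / out_sum[j] * ranks[j]
                           for j in range(n) if out_sum[j] > 0)
            new_ranks.append((1 - damping) / n + damping * incoming)
        ranks = new_ranks
    return ranks


doc = [
    "The storm flooded the coastal town.",
    "Rescue crews reached the flooded town by boat.",
    "The town council praised the rescue crews.",
    "Bananas are rich in potassium.",
]
ranks = textrank(doc)
best = max(range(len(doc)), key=lambda i: ranks[i])
```

The sentence that shares the most vocabulary with the others collects the most rank, while the unrelated banana sentence receives only the baseline score, with no training data involved.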

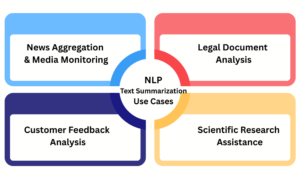

NLP Text Summarization Use Cases

News Aggregation and Media Monitoring: Sophisticated summarization pipelines are used by news systems such as Apple News and Google News to distill thousands of articles into easily readable chunks. Their algorithms balance extractive techniques for factual accuracy with abstractive techniques that create attention-grabbing headlines.

Legal Document Analysis: To process contracts, case law, and discovery documents that might otherwise take hundreds of billable hours, law firms are increasingly using specialized summarization systems. To find important clauses, precedents, and possible liabilities, these systems frequently combine entity recognition with domain-specific extraction models.

Scientific Research Assistance: To rapidly evaluate a paper’s results and significance, researchers employ summarization techniques tailored to their field. Citation networks and technical vocabulary weighting are two features that these customized models use to draw attention to important findings and methodological advancements in papers that could otherwise be overlooked.

Customer Feedback Analysis: Product teams at companies like Amazon and Samsung utilize aspect-based summarization to digest thousands of reviews into actionable insights. Rather than simple sentiment analysis, these systems extract specific product features mentioned across reviews and generate concise summaries of user experiences with each component.

Conclusion

Imagine summaries that adapt to your unique knowledge gaps, leaving out what you already know and adding context as needed. The real innovation will happen when these technologies are able to distill the key points from a three-hour podcast, place them in the context of pertinent research articles, and relate them to current affairs. As processing power increases and models gain deeper semantic understanding, the line between summarization and comprehension will blur.
